Identifying AI Slop in Writing: 11 Red Flags to Avoid (and How to Fix Each for Client Work)

Three years into using AI for paid copywriting and content projects, I’ve shipped dozens of pieces that looked fine on first read but bombed on delivery because they were stuffed with what’s now called “AI slop.”

The wake-up call came when a client asked me to revise an entire white paper I’d rushed through ChatGPT because it sounded professional but said absolutely nothing we could use. That stung, but it forced me to develop a system for catching low-quality AI-generated text before it reaches clients.

Research from Northeastern University confirms what content writers already suspected. In their paper titled “Measuring AI ‘Slop’ in Text,” the authors found that AI slop correlates with measurable text dimensions such as relevance, information density, factuality, repetition, tone issues, and coherence gaps.

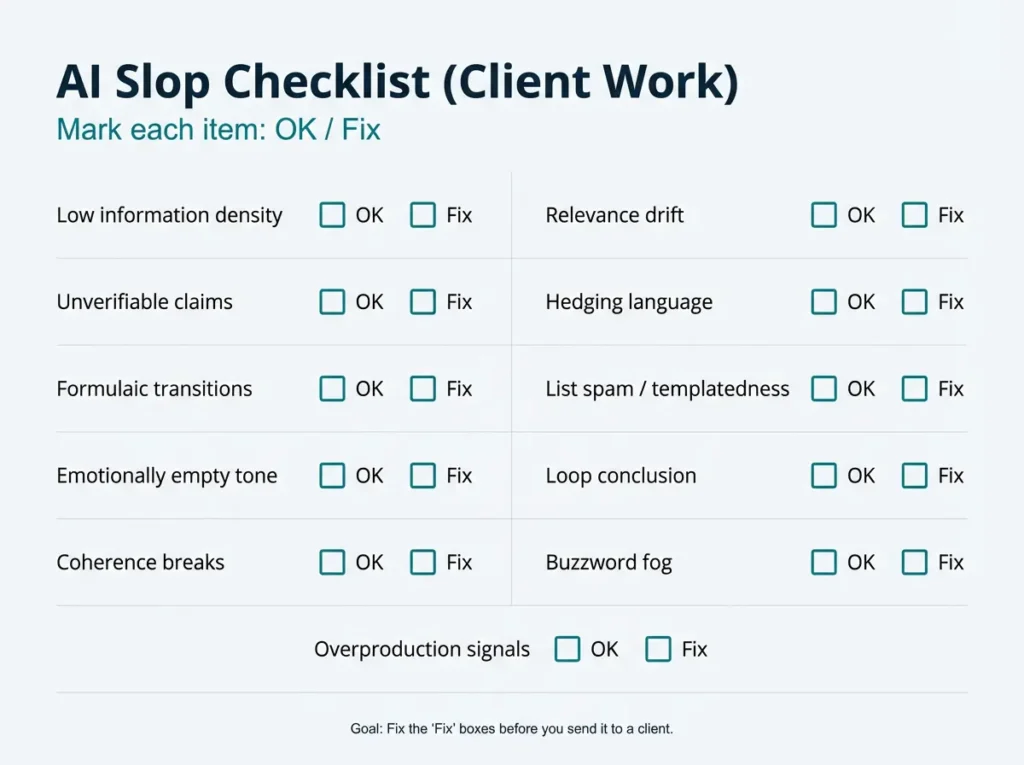

Armed with this taxonomy and months of editing trials, I built an 11-point checklist that turns risky AI drafts into client-safe copy. I’m walking you through every red flag and fix below.

How do you identify AI slop in a text?

Quick Answer: To identify AI slop in writing, check for three core quality deficits: information problems (vague statements without specifics, content that doesn’t answer the actual brief, and unverifiable claims), structural issues (formulaic transitions like “Additionally,” repetitive list patterns, and coherence breaks), and tone failures (hedging language, emotionally empty writing, and unnecessary jargon). Use the 60-second test below to catch slop fast before it reaches clients.

Key Takeaways

- AI slop text is low-quality AI-generated content marked by six measurable dimensions: density, relevance, factuality, structure, coherence, and tone, which research shows correlate strongly with professional quality judgments.

- The fastest slop detection method is the 3-to-5 test: if you can’t underline at least three concrete facts, examples, or verifiable claims in the first 200 words, the content lacks sufficient information density.

- Hallucinated claims are the silent contract-killer in client work because AI models predict plausible-sounding text patterns rather than verify factual accuracy, requiring strict “verify or remove” rules for all statistics and authoritative statements.

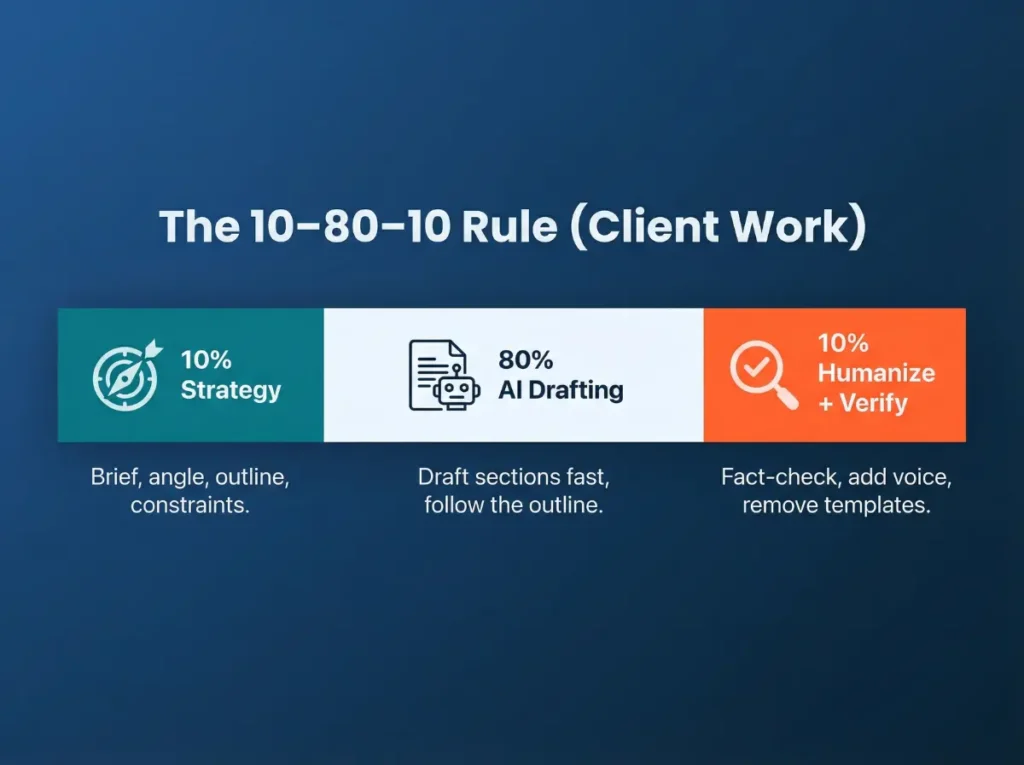

- The 10-80-10 workflow (10% human strategy, 80% AI drafting, 10% humanization and fact-checking) lets freelancers ship projects 2–3× faster while maintaining total quality control through mandatory QA gates.

- Client-safe AI writing requires replacing 20–30% of AI-generated sentences with lived experience, personal opinions, and specific examples, because authenticity and human perspective are precisely what clients pay premium rates for.

What Is AI Slop in Writing?

AI slop refers to low-quality, AI-generated text that appears grammatically correct but delivers little substantive value. It’s often generic, verbose, and easily mass-produced content that could apply to any niche with minimal tweaks.

Oxford University Press shortlisted “slop” for its 2024 Word of the Year, defining it as material produced using a large language model, which is often viewed as being low-quality or inaccurate. The term exploded because the internet is now flooded with this technically fluent but ultimately empty content.

For freelancers and content creators using AI for writing, slop isn’t just an aesthetic problem; it’s a business risk. Clients spot it, engagement tanks, and your credibility takes the hit.

The 60-Second Client-Safe AI Slop Test

Before getting into the full checklist, here’s my fastest filter:

If I can’t underline 3–5 concrete facts, claims, or examples in the first 200 words, it’s slop. This tests for information density and relevance, two of the strongest predictors of slop that researchers identified.

If the text sounds like it could be pasted into any niche without changes, it’s slop. That’s the interchangeability test.
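If you want a rough first pass before underlining by hand, the density half of this test can be approximated in a few lines of Python. This is only a sketch: it assumes a "concrete specific" is a sentence containing a digit or a capitalized name that appears mid-sentence, which is a crude proxy and not a validated detector. The function names and regexes are illustrative.

```python
import re

# Assumed signals of a "concrete specific"; a rough heuristic, not a validated list.
NUMBER = re.compile(r"\d")
# A capitalized word of 2+ characters that follows a lowercase word, comma, or semicolon.
MID_SENTENCE_NAME = re.compile(r"(?<=[a-z,;] )[A-Z][A-Za-z0-9]+")


def first_words(text: str, limit: int = 200) -> str:
    """Return the first `limit` whitespace-separated words of the text."""
    return " ".join(text.split()[:limit])


def count_specific_sentences(text: str, limit: int = 200) -> int:
    """Count sentences in the opening that contain a digit or a mid-sentence name."""
    opening = first_words(text, limit)
    sentences = re.split(r"(?<=[.!?])\s+", opening)
    return sum(
        1 for s in sentences
        if NUMBER.search(s) or MID_SENTENCE_NAME.search(s)
    )


def passes_density_test(text: str, minimum: int = 3) -> bool:
    """True when the opening holds at least `minimum` sentences with a specific."""
    return count_specific_sentences(text) >= minimum
```

A pass here only means the opening contains numbers or names. Whether those specifics are relevant and true still needs a human read.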

Use this 6-dimensional framework adapted from academic research to guide your edits:

- Density: Does it pack substantive information, or is it filler?

- Relevance: Does every paragraph answer the brief’s core question?

- Factuality: Are claims verifiable or vague?

- Structure: Is it repetitive or templated?

- Coherence: Does it flow logically, or do ideas jump randomly?

- Tone: Does it sound human, or like a corporate bot?

Now let’s break down each red flag with practical fixes.

AI Slop Checklist: 11 Red Flags (Ranked by Client Impact)

1) Low Information Density (Filler That “Says Nothing”)

What it looks like:

You’ll see paragraphs filled with broad, reusable statements that sound professional but contain zero actionable details.

Here’s a classic example: “Content marketing is essential in today’s digital landscape. Businesses must adapt to changing trends to remain competitive.”

These sentences are technically true, but they’re completely worthless because they could apply to any industry or topic.

Why does it happen?

AI models are trained on vast amounts of text from across the internet, which means they default to generic patterns whenever your prompts lack specific direction. These models optimize for fluency, making text sound smooth and professional, rather than for delivering actual insights or useful information.

How to identify it:

Use a highlighter to mark every sentence in your draft that could apply to almost any topic in your industry. If 70% or more of the sentences end up highlighted, your information density is too low. Research has shown that low information density correlates strongly with slop judgments from professional editors and writers.

How to fix it:

Go through your draft and replace 30–40% of generic sentences with specific details: actual numbers, named companies or people, concrete constraints your reader faces, or real tradeoffs they need to consider.

For example, instead of writing “Content marketing is essential,” try something like: “B2B companies with active blogs see 67% more inbound leads compared to those without blogs, based on HubSpot’s analysis of customer data.”

Notice how the revision gives your reader something they can actually use.

2) Relevance Drift (Answers Your Prompt, Not the Client’s Brief)

What it looks like:

This is content that technically addresses the writing prompt you gave the AI, but completely misses what the client actually needs to know.

I once generated what I thought was a social media strategy guide that spent 300 words explaining the history of different platforms, even though the client needed tactical posting schedules they could implement next week.

That happened because my prompt simply said “explain social media strategy” without specifying the practical application.

Why does it happen?

AI models lack real context about your reader’s job-to-be-done or their specific situation. They answer prompts literally and technically, not strategically. The AI doesn’t understand why someone is reading this piece or what decision they need to make afterward.

How to identify it:

Take your client’s brief and pull out the 3–5 must-answer questions. Then compare every paragraph in your draft to those questions. If a section doesn’t directly advance one of those answers or help the reader make their decision, you’ve got relevance drift.

Expert annotators in research studies flagged relevance as one of the top predictors of whether text qualifies as slop.
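The paragraph-by-paragraph comparison can be pre-screened with a simple keyword-overlap check. The sketch below assumes paragraphs are separated by blank lines and that sharing at least two content words with a must-answer question counts as "on brief". Both are simplifying assumptions, and the stopword list and function names are hypothetical.

```python
import re

# Small illustrative stopword list; extend it for real use.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "for", "in", "on", "by",
             "is", "are", "what", "which", "how", "should", "we", "our", "do", "does"}


def keywords(text: str) -> set[str]:
    """Lowercase alphanumeric words with the stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def drifting_paragraphs(draft: str, questions: list[str], min_overlap: int = 2) -> list[int]:
    """Return indexes of paragraphs sharing fewer than `min_overlap` keywords with every question."""
    question_terms = [keywords(q) for q in questions]
    paragraphs = [p for p in draft.split("\n\n") if p.strip()]
    flagged = []
    for i, para in enumerate(paragraphs):
        terms = keywords(para)
        best = max((len(terms & q) for q in question_terms), default=0)
        if best < min_overlap:
            flagged.append(i)
    return flagged
```

Keyword overlap misses synonyms and paraphrases, so treat a flagged paragraph as a prompt to reread it against the brief, not as proof of drift.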

How to fix it:

Before you even start drafting, create an outline with clear “must-answer questions” listed at the top. Use those questions to structure every section. Then, once you’ve drafted, delete tangents ruthlessly, even if they’re well-written.

For my social media example, the correct outline should have been: “1) Platform selection criteria based on B2B audience, 2) Posting frequency benchmarks by channel, 3) Monthly content calendar template.” That outline would have prevented the history tangent entirely.

3) Hallucinated or Weakly Sourced Claims (The Silent Contract-Killer)

What it looks like:

These are statements that sound authoritative and confident, but have absolutely no verifiable source behind them.

You’ll see things like: “Studies show that 78% of consumers prefer personalized emails.”

The problem? Which studies? Conducted when? By whom?

These hallucinated claims are especially dangerous because they sound credible enough that readers and clients might trust them without checking.

Why does it happen?

Large language models don’t actually “know” facts in the way humans do. Instead, they generate text by predicting which words are statistically likely to come next based on their training data.

This means they’ll confidently produce plausible-sounding statistics or claims that are completely fabricated, simply because the pattern of words “fits” the context.

How to identify it:

For every claim in your draft that includes a number, trend, or authoritative statement, ask yourself: “Could I trace this back to an actual source in under 2 minutes of searching?” If the answer is no, you need to flag it.

Research has found that factuality issues reached 76% agreement among expert annotators, which means hallucinations are reliably detectable by trained human reviewers.

How to fix it:

Adopt a strict “verify or remove” rule for all factual claims in client work. This means requiring citations, direct links, or even screenshots in your workflow before anything goes to the client.

For my own projects, I now run every statistic through a quick search on Perplexity AI before I include it in the final draft. If I can’t verify the claim within 90 seconds, I either cut it entirely or rephrase it.
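To make sure no claim slips past the "verify or remove" rule, a small script can list every sentence that looks like a factual claim but has no link attached. This sketch assumes a claim is any sentence with a digit or a vague appeal to authority, and that a source is a URL in the same sentence. The patterns and the function name are illustrative assumptions.

```python
import re

# Assumed markers of a checkable claim: a digit, or a vague appeal to authority.
CLAIM = re.compile(r"\d|\b(studies show|research shows|experts say)\b", re.IGNORECASE)
# Assumed evidence of sourcing: a URL somewhere in the same sentence.
SOURCE = re.compile(r"https?://\S+")


def unsourced_claims(draft: str) -> list[str]:
    """Return sentences that look like factual claims but carry no URL."""
    sentences = re.split(r"(?<=[.!?])\s+", draft.strip())
    return [s for s in sentences if CLAIM.search(s) and not SOURCE.search(s)]
```

The output is a to-do list for the manual source check. A URL next to a number does not prove the number is right, so the link itself still needs opening.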

4) The “Hedge” (Non-Committal Writing That Avoids a Stance)

What it looks like:

You’ll notice endless qualifying phrases that dodge concrete conclusions or recommendations. This is a hallmark of AI slop.

This kind of writing goes in circles without ever actually helping the reader decide anything.

Why does it happen?

AI models are specifically trained to avoid making controversial or definitive statements, because those could be wrong or offensive. This safety training leads them to produce safe, vague, wishy-washy language that never commits to a clear position or actionable recommendation.

How to identify it:

Do a search for commonly overused phrases like “it’s important to note,” “on the other hand,” “may potentially,” or “it’s worth considering.” If you’re seeing 3 or more of these hedge phrases per 200 words, you’ve got a serious problem with non-committal writing.
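That search is easy to script. The sketch below counts the four phrases named above and scales the count to a per-200-words rate. The phrase list is just a starting point, and the function names are my own illustrative choices.

```python
import re

# The hedge phrases named in the text; add your own repeat offenders.
HEDGES = [
    "it's important to note",
    "on the other hand",
    "may potentially",
    "it's worth considering",
]


def hedges_per_200_words(text: str) -> float:
    """Return the number of hedge phrases per 200 words of text."""
    # Normalize curly apostrophes so "it’s" and "it's" both match.
    normalized = text.lower().replace("’", "'")
    hits = sum(len(re.findall(re.escape(h), normalized)) for h in HEDGES)
    words = len(normalized.split())
    return hits * 200 / words if words else 0.0


def too_hedged(text: str, limit: float = 3.0) -> bool:
    """True when the hedge rate reaches the 3-per-200-words threshold."""
    return hedges_per_200_words(text) >= limit
```

Because the rate is scaled, very short snippets can look worse than they are. Run it on a full section, not on a single sentence.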

How to fix it:

Force yourself to take a clear point of view and back it up with reasoning. Add specific recommendations that include conditions or context.

For example, “When your product costs over $500, you should prioritize long-form educational content over quick social media posts because high-consideration buyers need depth and detail to justify the investment.”

Notice how that gives the reader a clear decision rule instead of vague possibilities to consider.

5) Formulaic Transitions + Repetitive Scaffolding

What it looks like:

This shows up as a relentless use of the same transition words, such as “Additionally,” “Furthermore,” “Moreover,” and “In conclusion,” paired with paragraphs that all follow identical rhythms and patterns. Experienced copywriters report this as an instant tell that they’re reading AI-generated text.

Why does it happen?

Modern LLMs favor what are called “syntactic templates,” which are repeated Part-of-Speech patterns that create structural monotony. The models learn these templates from their training data and then reproduce them constantly because they’re statistically common patterns in published text.

How to identify it:

Read three consecutive paragraphs in your draft out loud. If they all start the same way or follow the exact same internal beat (such as opening statement → elaboration → transition to next topic), you’re dealing with templatedness, which is one of the key slop dimensions identified in research.
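The "same opening three times in a row" part of this check can be sketched in Python. The version below assumes paragraphs are separated by blank lines and compares only the first word of each one, so it catches repeated transitions like "Additionally" but not repeated internal rhythm. The function name is hypothetical.

```python
def repeated_openers(draft: str, run_length: int = 3) -> list[str]:
    """Return opening words that start `run_length` or more consecutive paragraphs."""
    paragraphs = [p.strip() for p in draft.split("\n\n") if p.strip()]
    # First word of each paragraph, lowercased, with trailing punctuation removed.
    openers = [p.split()[0].strip(",.:;").lower() for p in paragraphs]
    flagged = []
    run = 1
    for prev, cur in zip(openers, openers[1:]):
        run = run + 1 if cur == prev else 1
        if run == run_length and cur not in flagged:
            flagged.append(cur)
    return flagged
```

Common words such as "the" can trigger it too, so read any hit in context. The read-aloud test remains the better judge of rhythm.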

How to fix it:

Deliberately vary your paragraph structures and use logical connectors that actually match your reasoning, rather than falling back on formulaic transitions.

Replace boring phrases like “Additionally, companies should consider X” with contextual connectors like “When budget allows, X becomes a viable next step” or “The data from the previous section suggests X, which means your immediate priority should be Y.” Each transition should earn its place by moving the logic forward.

6) Templatedness (Copy-and-Paste Patterns, List Spam)

What it looks like:

You’ll see bullet point lists where every single point is essentially the same idea expressed with slightly different words: “1) Increase customer engagement, 2) Boost audience interaction, 3) Enhance user participation, 4) Improve community involvement.” All four of those bullets say exactly the same thing.

Why does it happen?

AI models are trained on massive amounts of listicle-heavy content from blogs and marketing sites, so they default to this bulleted structure because it appears frequently in their training data. The model doesn’t evaluate whether the points are actually distinct. It just knows lists are statistically common.

How to identify it:

Try this test: see if you can merge 3 of your bullet points into one sentence without losing any meaningful information. If you can, your list is padded with redundancy. Professional annotators in research studies consistently flagged this kind of list padding as structural slop.

How to fix it:

Merge redundant points ruthlessly until each bullet contains a genuinely distinct idea. Even better, transform generic lists into worked examples or decision trees that help readers apply the information.

Take a look at this example: “If your engagement rate is currently below 2%, your immediate priority should be video content. If you’re already above 5%, your content mix is working, so focus on optimizing posting times instead of overhauling your format.”

Such text gives readers a clear path based on their specific situation.

7) “Nobody’s Home Vibe” (Fluent but Emotionally Empty)

What it looks like:

This is text that’s grammatically perfect and reads smoothly, but contains absolutely zero lived experience, emotional stakes, or personal opinion. It is technically correct yet completely devoid of personality or substance. You can read an entire paragraph and come away feeling like no actual human being was involved in creating it.

Why does it happen?

AI fundamentally lacks embodied human experience. It can mimic patterns it’s seen in training data, but it can’t access what one writer called “the emotional memories linked to particular sounds or sentence structures” that human writers unconsciously draw on when they write. The AI has never done the work, felt the frustration, or experienced the breakthrough it’s describing.

How to identify it:

The research taxonomy includes tone and bias/subjectivity as distinct indicators of slop in AI-generated text. When content that needs a human perspective and emotional texture lacks them, the text feels hollow and generic, even if it’s technically accurate.

How to fix it:

Systematically add what I call the “I did / I saw / I learned / I recommend” layer to your AI-generated text. This should transform generic statements into experience-based insights.

Here’s an example:

- Before (AI slop): “Project management tools help teams stay organized and meet deadlines more effectively.”

- After (human voice): “I switched our team to Notion last year after watching three consecutive projects miss their deadlines in Asana. The database view was the game-changer because it finally let us see dependency blockers across all workstreams in real time.”

Notice how the revision proves you’ve actually used the tool and learned something specific from that experience.

8) Loop Conclusions (Repeating the Intro to Pad Word Count)

What it looks like:

These are conclusions that simply rephrase your introduction without adding any new insight, next steps, or useful takeaways. Content creators have identified this as pure word-count padding that delivers zero value to the reader.

Why does it happen?

LLMs are trained on thousands of published articles that follow the traditional structure of “tell them what you’ll tell them, tell them, then tell them what you told them.” So the models default to creating summary conclusions that restate earlier content, because that pattern is statistically common in their training data.

How to fix it:

Require every conclusion section to add at least one of these four elements:

- a concrete next step the reader should take,

- a decision checklist they can use,

- a specific tradeoff they should watch for, or

- a key metric they should track going forward.

For example, instead of ending an email marketing article with “Email marketing remains an important channel for businesses,” write something useful: “Test one new subject line format each week for the next month. Track your open rates in a simple spreadsheet, and you’ll identify your specific audience’s preference pattern within 30 days.”

This gives readers something actionable to do next.

9) Coherence Breaks (Smooth Sentences, Jumbled Logic)

What it looks like:

Individual sentences read perfectly fine when you look at them one by one, but when you zoom out to the paragraph or section level, the overall flow feels disjointed and confusing. You’ll finish reading a section and find yourself wondering, “Wait, why was that point even here? How does it connect to what came before?”

Why does it happen?

AI generates text sequentially based on the most recent tokens (words), without maintaining a substantial understanding of the global logical arc or argument structure of the entire piece. It’s optimizing locally (this sentence following that sentence) rather than globally (does this entire section build a coherent argument).

Research has noted that coherence is extremely hard to measure with automatic tools, but human readers reliably detect when it’s broken.

How to identify it:

After you’ve finished drafting a section, try this reverse-engineering test: outline the piece backward by writing down what each paragraph actually says in one sentence. If your reverse-engineered outline reveals logical gaps, random topic jumps, or missing connections between ideas, you’ve got coherence problems.
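A script can give you a head start on the reverse outline by pulling the first sentence of every paragraph. This is a sketch under one big assumption: that the first sentence is the topic sentence. When it isn't, that mismatch is itself a coherence signal. The function name is illustrative.

```python
import re


def reverse_outline(draft: str) -> list[str]:
    """Return the first sentence of each paragraph as a numbered outline line."""
    paragraphs = [p.strip() for p in draft.split("\n\n") if p.strip()]
    outline = []
    for i, para in enumerate(paragraphs, start=1):
        # Split once at the first sentence-ending punctuation followed by whitespace.
        first = re.split(r"(?<=[.!?])\s+", para, maxsplit=1)[0]
        outline.append(f"{i}. {first}")
    return outline
```

Read the resulting list on its own. If the sequence doesn't form an argument, rewrite each line into what the paragraph really says and look for the gaps.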

How to fix it:

Apply a strict “topic sentence + supporting proof + clear takeaway” structure to every single paragraph. Then, once you’ve tightened each paragraph internally, reorder your sections aggressively until the logic flows in a clear sequence: problem identification → evidence that proves the problem → implications of that evidence → concrete solution.

Don’t be afraid to cut sections that don’t fit into that logical chain, no matter how well-written they are individually.

10) Unnecessary Complexity / Buzzword Fog

What it looks like:

This shows up as jargon-heavy writing that obscures simple ideas behind unnecessarily complex language.

You’ll see sentences like: “Leverage synergistic touchpoints to optimize stakeholder engagement across the customer journey.” The actual meaning? “Use multiple communication channels to reach your audience.”

The complex version doesn’t make you sound smarter. It just makes your reader work harder for no reason.

Why does it happen?

Models trained heavily on corporate communications and academic papers tend to overweight complex vocabulary and business jargon, mistaking that complexity for professionalism or expertise. The research taxonomy specifically includes word complexity as a measurable slop indicator.

How to identify it:

Run your draft through a free readability checker like Hemingway Editor or a Flesch-Kincaid calculator.

If your content is scoring above a 12th-grade reading level when you’re writing for a general business audience, you need to simplify. Most professional content should hit somewhere between the 7th and 9th grade reading level for maximum accessibility and comprehension.
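If you'd like to see what a grade-level score is made of, here is a minimal Python sketch of the Flesch-Kincaid grade formula: 0.39 × (words per sentence) + 11.8 × (syllables per word) − 15.59. The syllable counter is a rough vowel-group estimate that I'm assuming is good enough for comparison, so scores will differ somewhat from dedicated tools.

```python
import re


def count_syllables(word: str) -> int:
    """Rough syllable estimate: count vowel groups, discount a trailing silent 'e'."""
    word = word.lower()
    groups = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and groups > 1:
        groups -= 1
    return max(groups, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Approximate US grade level using the Flesch-Kincaid grade formula."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59
```

It is most useful for before-and-after comparisons: score a paragraph, simplify it, and score it again. The absolute number matters less than the direction.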

How to fix it:

Systematically replace industry jargon with the actual vocabulary your client or their customers use in real conversations. Shorten your sentences intentionally. Most importantly, use concrete examples instead of abstract concepts.

For example, a sentence like, “Send a welcome email, a detailed case study, and a 3-minute product demo video spread across the customer’s first week” is infinitely clearer and more useful than “Deploy a multi-touch onboarding sequence designed to optimize conversion velocity.”

11) Overproduction Signals (Too Much Content, Too Little Review)

What it looks like:

Content that feels noticeably rushed, with inconsistent quality that varies dramatically from one section to another within the same piece.

The telltale warning sign: you’re publishing 10 or more pieces per week, and they all have the exact same voice, tone, and structural patterns.

Why does it happen?

AI slop is fundamentally about volume over quality. It is about the ease of mass-producing content without proper quality gates or human review.

AI exacerbates the slop problem by removing friction in content production, making it trivially easy to publish at volumes that would have been impossible before.

How to identify it:

Ask yourself honestly: are you shipping content faster than you can carefully review it?

Engagement metrics collapse rapidly when volume overtakes thoughtful curation and editing. If you’re spending more time generating with AI than editing, you’re at high risk.

How to fix it:

Deliberately publish less, but ship stronger quality work. Add a mandatory quality control gate to your process. For example, every single piece must pass at least 8 out of your 11 slop checks before you release it to the client or publish it.
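The 8-out-of-11 gate is simple enough to encode so it can't be skipped on a busy day. In this sketch the check names are my own shorthand for the 11 red flags, and you record each result as True or False after your manual review. Nothing here evaluates the text itself.

```python
# Shorthand labels for the 11 red flags; the names are illustrative.
RED_FLAGS = [
    "information_density", "relevance", "sourced_claims", "committed_stance",
    "varied_transitions", "distinct_list_items", "human_voice", "useful_conclusion",
    "coherence", "plain_language", "reviewed_before_shipping",
]


def passes_slop_gate(results: dict[str, bool], required: int = 8) -> bool:
    """True when at least `required` of the 11 checks were marked as passed."""
    missing = [name for name in RED_FLAGS if name not in results]
    if missing:
        # Refuse to score a piece that was only partly reviewed.
        raise ValueError(f"unscored checks: {missing}")
    return sum(results[name] for name in RED_FLAGS) >= required
```

Raising an error on missing checks is a deliberate choice: an unreviewed flag should block delivery instead of quietly counting as a pass or a fail.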

For client work specifically, I now use the 10-80-10 rule: 10% of the time goes into human strategy and briefing, 80% into AI drafting and structuring, and the final 10% into rigorous humanization and fact-checking. This workflow lets me ship projects 2–3x faster while maintaining the total quality control that keeps clients coming back.
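To turn the split into a schedule, the arithmetic is a one-liner per phase. The helper below is a hypothetical convenience, assuming you budget a project in hours.

```python
def split_10_80_10(total_hours: float) -> dict[str, float]:
    """Divide a project's hours into the 10-80-10 phases."""
    return {
        "strategy_and_briefing": total_hours / 10,
        "ai_drafting": total_hours * 8 / 10,
        "humanizing_and_fact_checking": total_hours / 10,
    }
```

For a 10-hour project that works out to 1 hour of briefing, 8 hours of drafting and structuring, and 1 hour of humanization and fact-checking.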

To find out more on the 10-80-10 rule, read this article: Before You Use AI for Freelance Writing, You MUST Do This (The 2026 Survival Protocol)

My “No-Slop” Client Workflow

Here’s the five-step process I use to keep AI writing checklist-ready and client-safe for paid projects.

Step 1: Anti-Slop Brief Inputs

Before you even touch the AI tool, take time to document these critical elements:

- Your target audience’s specific role, their main pain points, and their decision-making criteria

- The 3–5 must-answer questions this piece needs to address

- Forbidden claims, that is, anything you can’t personally verify with a source

- Required specificity level: Will you need case studies, hard metrics, templates, or screenshots?

This front-loading ensures you’re building relevance and information density requirements into the project from the very beginning.

Step 2: Constrained AI Drafting

Always generate a detailed outline first. Never let the AI freeform draft without structure. I maintain complete control over the argument structure and section flow, while the AI fills in the individual sections based on my outline.

If the AI is making editorial decisions, it’s slop.

Step 3: Verification Pass

Every single factual claim in your draft needs to go through a source check. Every statistic requires a working link or a screenshot of where you found it. This verification step catches dangerous hallucinations before any client ever sees them.

Step 4: Human Voice Pass

Go through the draft and deliberately add your personal opinions, small imperfections, and specific examples from real experience. Plan to replace 20–30% of the AI-generated sentences with your own lived experience and perspective.

The imperfections, such as the slightly rambling sentence or the informal phrasing, are features that signal authentic human authorship, not bugs to eliminate.

Step 5: Final QA Rubric

Score each piece on the six research taxonomy dimensions using a simple 1–5 scale, with the criteria below as the bar each dimension must clear:

| Dimension | Minimum passing threshold |
|---|---|
| Density | At least 3 concrete specifics per 200 words |
| Relevance | Every section must advance the brief’s core questions |
| Factuality | Zero unverifiable claims allowed |
| Structure | No more than 2 identical transition phrases in sequence |
| Coherence | Reverse outline must make logical sense |
| Tone | Contains clear personal voice markers |

If any dimension scores below your minimum threshold, revise that section before you deliver the work.
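The scoring step can be tracked with a small helper that lists which dimensions need another pass. This is a sketch: the scores come from your own manual review, and the default minimum of 4 is an assumed threshold, so set whatever bar you've agreed with the client.

```python
DIMENSIONS = ["density", "relevance", "factuality", "structure", "coherence", "tone"]


def dimensions_to_revise(scores: dict[str, int], minimum: int = 4) -> list[str]:
    """Return the dimensions scored below `minimum` on the 1-5 scale."""
    for name in DIMENSIONS:
        if not 1 <= scores[name] <= 5:
            raise ValueError(f"{name} must be scored from 1 to 5")
    return [name for name in DIMENSIONS if scores[name] < minimum]
```

An empty list means the piece clears the rubric. Anything else is the revision list for that draft.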

Conclusion

You can identify AI slop in writing by systematically checking for these 11 research-backed red flags.

The consensus among both content creators and academic researchers is clear: AI should augment human creativity, not replace it.

Clients pay premium rates for specificity, verifiable claims, and authentic human perspective, which are precisely the qualities that AI slop text lacks.

Your competitive advantage isn’t going to be speed of production. Instead, it’s going to be your ability to ship AI-assisted content that consistently passes rigorous client-safe quality checks. Use this checklist, enforce the workflow, and you’ll turn risky AI drafts into polished work that earns you contract renewals instead of revision requests.

What’s one slop red flag you’ll check first in your very next AI draft?

Start there, and build the habit one piece at a time.

Frequently Asked Questions (FAQs): How to Tell If AI-Generated Text Content Is Bad

How do I quickly spot AI copywriting mistakes?

Watch for low information density, with vague, reusable statements instead of specifics; formulaic transitions like “Additionally” and “Furthermore” used repeatedly; hedging language such as “it’s crucial to consider” that avoids taking a stance; and missing verifiable sources for factual claims.

Research has shown that these patterns correlate strongly with slop judgments from professional editors.

Can AI detectors reliably identify slop?

No, they cannot. AI detection tools are designed to flag AI authorship rather than content quality, and they produce high false positive rates on human-written text. AI text slop is fundamentally about value and usefulness, not about who or what created it.

Even advanced models achieved low precision when trying to extract slop spans from text. Your time is better spent editing for the taxonomy dimensions rather than running detection tools.

What’s the fastest way to edit AI-generated text content for client work?

Use the five-step workflow outlined above: enforce specific brief requirements upfront before drafting, create drafts using detailed outlines rather than freeform generation, verify every factual claim with sources, add personal voice and concrete examples in a dedicated editing pass, and score the result against the final QA rubric.

Budget approximately 40% of your total project time for this human refinement stage.